Top Eight Best Web Scraping Tools in 2020

Content

The 10 Best Data Scraping Tools And Web Scraping Tools

Based on this, you can shortlist the tools that match your requirements and budget. Don't forget to consider customer support when finalizing the tool. Web scraping is a powerful way to fetch such data and leverage it for reputation monitoring. You can easily obtain the data you need and get started with real-time analytics. With the help of a robust web scraping tool, you can monitor MAP (minimum advertised price) compliance continuously and easily.

Scraper API

Web scraping looks for the information users need and puts it into a usable format. A key part of the post-manufacturing process is ensuring that retailers are meeting minimum price requirements. However, those with a large distribution network simply cannot visit every single website manually on a constant basis.

ScrapeSimple

Use text mining with FindDataLab to get word frequency distributions for the queries you are interested in. Find out how many times your product's name or a specific topic appears in search engine results by using FindDataLab's Google search data scraper.
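
As a rough illustration of what a word frequency distribution looks like, here is a minimal Python sketch that counts words across a few search result snippets. The `snippets` list and the `word_frequencies` helper are hypothetical and stand in for text that a scraper would return; this is not FindDataLab's implementation.

```python
import re
from collections import Counter


def word_frequencies(texts):
    """Count how often each lowercase word appears across a list of texts."""
    counts = Counter()
    for text in texts:
        # Keep runs of letters, digits and apostrophes; drop everything else.
        words = re.findall(r"[a-z0-9']+", text.lower())
        counts.update(words)
    return counts


# Hypothetical search result snippets (illustrative data only).
snippets = [
    "Acme Widget review: is the Acme Widget worth it?",
    "Top widget brands compared",
]

freq = word_frequencies(snippets)
print(freq.most_common(3))
```

Looking up a single entry, such as `freq["widget"]`, gives the number of times a product name or topic appears in the collected text.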

Ready To Start Scraping?

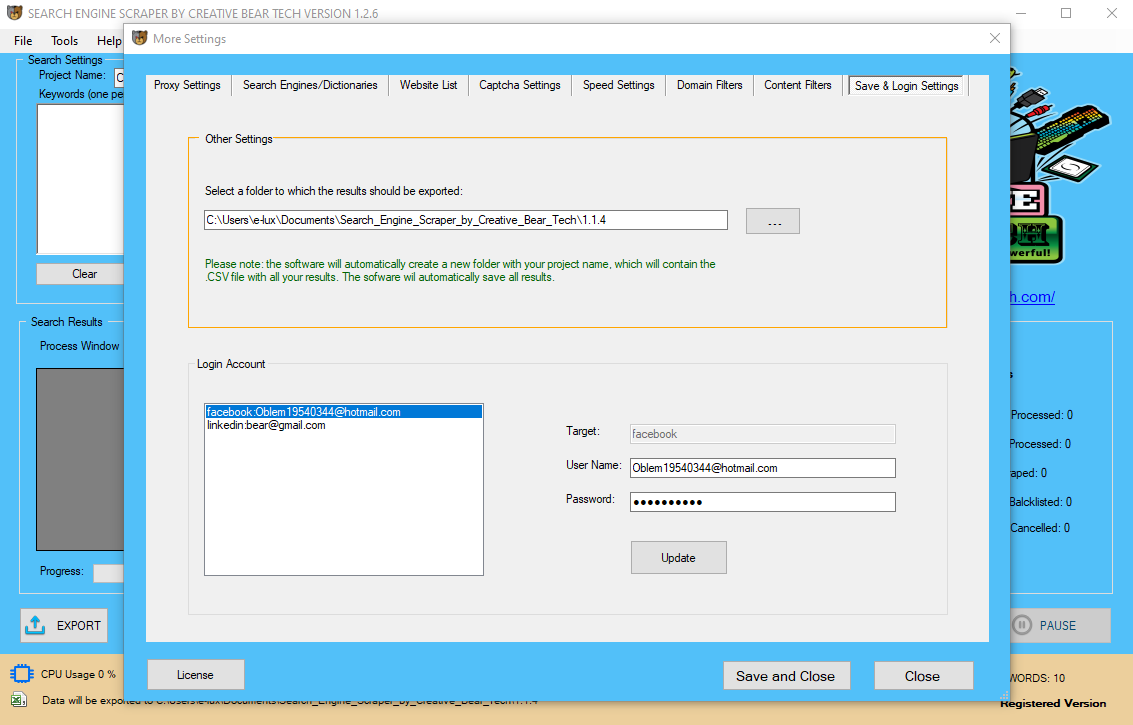

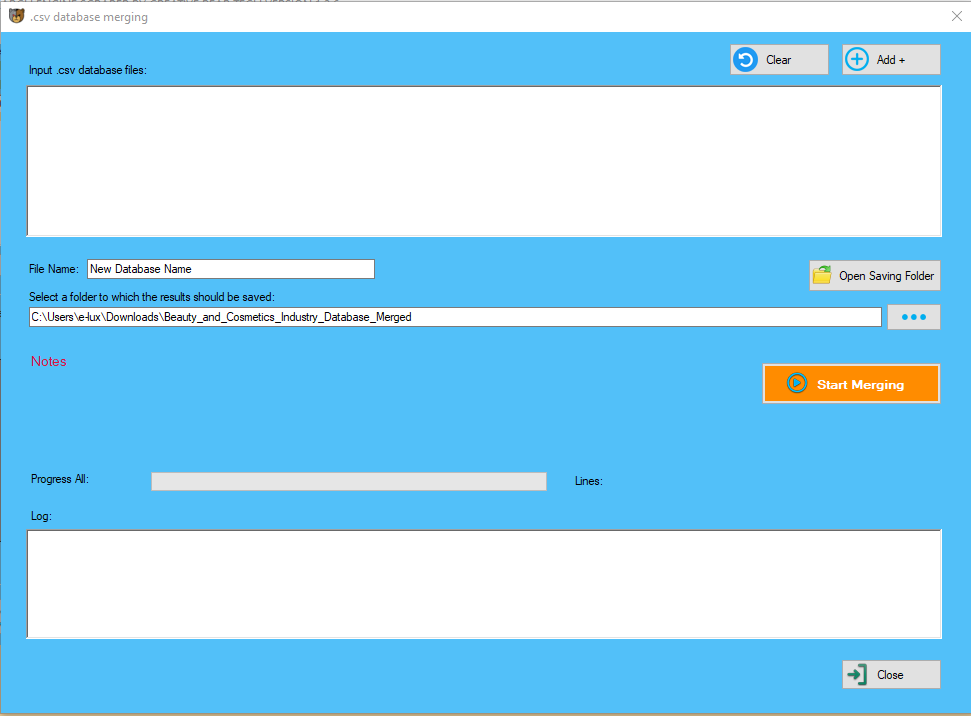

Helpful video and text tutorials let you get up to speed quickly. Mozenda takes the hassle out of automating and publishing extracted data.

You also have to keep in mind whether the web scraping tool has built-in delay and IP rotation settings, preferably with a user-agent rotation option as well. If you are using a free web scraping tool, chances are that there will be no delay setting available, no IP rotation, and certainly no user-agent rotation at your disposal. If your project requires some kind of data enrichment or dataset merging, you will have to take additional steps to make it happen. This could be another time sink, depending on the complexity of your web scraping project. Another honorable mention in this category is browser plugins, which may or may not work.
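
To make the idea of user-agent rotation concrete, here is a minimal sketch using only Python's standard library. The user-agent strings, the `build_requests` helper and the example.com URLs are all made up for illustration, and the requests are only constructed, never sent.

```python
import itertools
import urllib.request

# Example user-agent strings (hypothetical; a real scraper would keep these current).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15) ExampleBrowser/2.0",
    "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/3.0",
]


def build_requests(urls, user_agents=USER_AGENTS):
    """Pair each URL with the next user agent, cycling through the list."""
    agents = itertools.cycle(user_agents)
    built = []
    for url in urls:
        req = urllib.request.Request(url, headers={"User-Agent": next(agents)})
        built.append(req)
    return built


# The requests are only built here, not sent.
reqs = build_requests(["https://example.com/page/%d" % i for i in range(4)])
for req in reqs:
    # urllib stores header names capitalised as "User-agent".
    print(req.full_url, "->", req.get_header("User-agent"))
```

With three user agents and four URLs, the fourth request wraps around to the first user agent again. IP rotation follows the same pattern, with a list of proxies in place of the list of user agents.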

Scraping-bot

It lets you integrate data into applications using APIs and webhooks.

Scrapy is a web crawling and web scraping framework written in Python for Python developers. However, Scrapy does not render JavaScript and, as such, requires the help of another library. You can use Splash or the popular Selenium browser automation tool for that. PySpider, by contrast, comes with some unrivalled features such as a web UI script editor. However, it is less mature than Scrapy, as Scrapy has been around since 2008 and has better documentation and user community. If you are a JavaScript developer, you can use Cheerio for parsing HTML documents and Puppeteer to control the Chrome browser. If you plan to use a programming language other than Python or JavaScript, there are tools you can use as well.

A recent ruling in favor of web scraping makes the waters even muddier. However, contact your lawyer, as the technicalities involved might make it illegal.

Social media scraping can be used to gather data about users and their information. Content creators use web scraping to detect what is trending on different social media platforms so that they can create content related to those trends.

It is a robust tool that can help you create more reliable web scrapers. PySpider is another web scraping tool you can use to write scripts in Python. Unlike Scrapy, it can render JavaScript and, as such, does not require the use of Selenium.

Make sure to check out FindDataLab's 10 tips for web scraping to learn in more detail how to avoid getting blocked, among other suggestions. All in all, spacing out requests is important so that you don't overload the server and inadvertently cause damage to the website. Therefore, you have to be particularly careful when choosing ready-made web scraping software, as it may not have this feature. Request randomisation comes in when we want the web scraper to appear more human-like in its browsing habits. In essence, if you think about how you browse the web, you will conclude that you click on things, scroll, and perform other activities in a random manner.

Web scraping is used to monitor retailer websites and send compliance notifications back to the manufacturer without needing a human to spend valuable time on the task.

Scrapinghub is a cloud-based crawling service by the creators of Scrapy. It also means you can swap out individual modules for other Python web scraping libraries. If you use anonymous proxies at all times, you rarely have to worry about being locked out of a website during a web scraping operation.

A Web Crawler as a Service (WCaaS), 80legs gives users the ability to perform crawls in the cloud without putting the user's machine under a lot of stress. Is there any software you use for web scraping that didn't make this list?

Apify (formerly Apifier) converts websites into APIs in quick time. It is a great tool for developers, as it improves productivity by reducing development time. It has a large user community, and other developers have built libraries for scraping certain websites with Apify that can be used right away. It has a free plan to scrape 200 pages in 40 minutes; more advanced premium plans exist for more complex web scraping needs.

Find the best candidates for a job opening at your company in mere seconds, and monitor feedback about your company as an employer. Aggregate reviews for companies or products from e-commerce sites. Extract their ratings and build a database of similar companies or equally rated services.

Scraping websites with strict anti-spam systems is a difficult task, as you have to deal with a good number of obstacles. ScrapingAnt can help you handle all of these obstacles and effortlessly get you the data you need. Aside from IP rotation, Scraper API also handles headless browsers and helps you avoid dealing with CAPTCHAs directly. This web scraping API is fast and reliable, with a good number of Fortune 500 companies on its customer list. With over 5 billion API requests handled every month, Scraper API is a force to be reckoned with in the web scraping API market.
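
Scraping APIs of this kind are generally called by sending the address of the target page, together with an API key, to the service's endpoint. The sketch below only builds such a request URL. The endpoint `https://api.example.com/scrape` and the parameter names `api_key`, `url` and `render` are assumptions made up for illustration and are not taken from any particular provider's documentation.

```python
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names, for illustration only.
API_ENDPOINT = "https://api.example.com/scrape"


def build_api_url(api_key, target_url, render_js=False):
    """Return the full request URL for the hypothetical scraping API."""
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        # Ask the (hypothetical) service to load the page in a headless browser.
        params["render"] = "true"
    # urlencode percent-encodes the target URL so it survives as one parameter.
    return API_ENDPOINT + "?" + urlencode(params)


request_url = build_api_url(
    "YOUR_API_KEY",
    "https://example.com/products?page=2",
    render_js=True,
)
print(request_url)
```

Encoding the target URL matters here: without it, the `?page=2` part of the target address would be misread as a parameter of the API call itself.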

- Sequentum (Content Grabber) is a data scraping tool that automatically collects content elements such as catalogs or web search results.

- However, if you understand the full features and benefits of the different tools, you can make web scraping a fruitful and pleasant experience!

- You can definitely grow your business by using these web page scraping tools.

It offers a browser-based editor to set up crawlers and extract data in real time. You can save the collected data on cloud platforms like Google Drive and Box.net, or export it as CSV or JSON.

Import.io uses cutting-edge technology to fetch millions of data points every day, which businesses can access for a small fee. Along with the web tool, it also offers free apps for Windows, Mac OS X and Linux to build data extractors and crawlers, download data, and sync with the online account. Import.io provides a builder to form your own datasets by simply importing the data from a particular web page and exporting the data to CSV.

HarvestMan is an open source, multithreaded web crawler program written in Python. HarvestMan is released under the GNU General Public License. Like Scrapy, HarvestMan is very flexible; however, your first installation will not be easy.

On the other hand, complex actions can be defined by combining existing ones, using variables, and incorporating JavaScript code. This makes Helium Scraper a very versatile web scraper that can be easily configured to extract from simple websites, but can also be adjusted to handle more complex scenarios. Octoparse is a powerful yet free web scraper with a wealth of comprehensive features.

It is very useful because it gives different views on each of the tools. Apart from these, ScrapeBox is my all-time favorite; it has many add-ons. For only a one-time fee, these two tools could save plenty of time and money. These tools are useful if you have to extract data from search engines.

This is because the data being scraped is publicly available on their website. Before scraping any website, do contact a lawyer, because the technicalities involved may make it unlawful.

Just send your request to the API URL with the required data, and you'll get back the information you require. However, an API's restrictive nature leaves developers with no alternative but to scrape the web. Request limits and restrictions on certain content are why people engage in web scraping.

With 80legs, you only pay for what you crawl; it also provides easy-to-use APIs to help make developers' lives easier. Dexi doesn't just work with websites; it can be used to scrape data from social media sites as well.

Scraper is a free tool that works right in your browser and auto-generates smaller XPaths for defining URLs to crawl. It doesn't offer you the ease of automatic or bot crawling like Import.io, Webhose and others, but that is also a benefit for novices, as you don't need to tackle messy configuration.

You can easily gather and manage web data with its simple point-and-click interface. This web scraping tool lets you cut costs and saves your organization precious time. This web scraping tool helps you form your datasets by importing the data from a particular web page and exporting the data to CSV.
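
For readers who want to see what the CSV export step amounts to, here is a minimal sketch using Python's standard `csv` module. The `scraped` records and the `rows_to_csv` helper are hypothetical; this is not how any of the tools above implement their export.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialise a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical scraped records (illustrative data only).
scraped = [
    {"name": "Widget A", "price": "19.99"},
    {"name": "Widget B, large", "price": "24.50"},
]

csv_text = rows_to_csv(scraped, ["name", "price"])
print(csv_text)
```

Using the `csv` module rather than joining strings by hand means values that contain commas, such as the second product name, are quoted automatically.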

Record your actions once, navigating to a specific page and entering a search term or username where appropriate. This is especially useful for navigating to a specific stock you care about, or to campaign contribution data that is mired deep in an agency website and lacks a unique web address. It can also help convert web tables into usable data, but OutWit Hub is really better suited to that purpose.

You can always start with free web scraping software or plugins, but remember that nothing is completely free. You might luck out and be able to perform simple web page scraping tasks, or you could download software that is non-functional in the best case and malware in the worst.

Perform web scraping in real time, for example by scraping financial data such as stock market data and its changes. Another web scraping use is scraping sports results pages for real-time updates. Scrape multiple job boards and company websites to aggregate information about a specific specialty.

You can easily scrape thousands of web pages in minutes without writing a single line of code, and build 1000+ APIs based on your requirements. If you are into online shopping and like to actively track prices of products you are looking for across multiple markets and online stores, then you definitely need a web scraping tool.

Yes, web scraping is legal, although many websites do not support it.

Its system is quite functional and can help you handle a good number of tasks, including IP rotation using its own proxy pool of over 40 million IPs. AutoExtract API is one of the best web scraping APIs you can get on the market. It was developed by Scrapinghub, the creator of Crawlera, a proxy API, and lead maintainer of Scrapy, a popular scraping framework for Python programmers.

At the end of 2019, a court ruled that scraping LinkedIn's publicly available data is legal. The case of hiQ vs. LinkedIn has major implications with regard to privacy. It implies that data entered by users on a social media site, especially data that has been available for the public to see, does not belong to the site owner. For instance, if we are trying to scrape names and e-mails from a particular website as a means of generating leads, we need to obtain consent from each lead for it to be allowed under the GDPR.

Tell Mozenda what data you want once, and then get it however frequently you need it. Plus, it allows advanced programming using a REST API, through which the user can connect directly to their Mozenda account.

ParseHub is a powerful tool that lets you harvest data from any dynamic website without writing any web scraping scripts. One of the great things about Dataminer is that there is a public recipe list that you can search to speed up your scraping. ParseHub is a web scraping desktop application that lets you scrape the web, even with complicated and dynamic websites and scenarios. Goutte provides a nice API to crawl websites and extract data from the HTML/XML responses. Dexi Intelligent is a web scraping tool that lets you transform unlimited web data into immediate business value.

If you know what data you need, it is possible to filter it out of the "soup" by using a selector. The two main ways of selecting page elements are CSS selectors and XPath navigation. All in all, the more robust way of selecting items on a page is by using CSS selectors. Nobody writes code in a vacuum, so there may come a time when you have to ask for advice or discuss your web scraper's code with someone. Since more people are familiar with CSS selectors and use them in their daily work, it will be easier to debug and maintain your code, whether by hiring help or asking for advice.

Web scraping makes your job easier by getting the necessary data in an automated and lightning-fast way. When it comes to applying external data and knowledge to intelligent automation, Dexi is no doubt one of the best tools in the industry. For a cloud-based, self-serve web page scraping platform, Mozenda is unrivalled in the market. For instance, if you need to plug in Selenium for scraping dynamic web pages, you can do that.

HTML Scraping with lxml and Requests: a short and sweet tutorial on pulling a web page with Requests and then using XPath selectors to mine the desired data. It is more beginner-friendly than the official documentation.
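
To show what selecting page elements looks like in practice, here is a small sketch of XPath-style selection. It uses the standard library's `xml.etree.ElementTree`, which supports only a limited subset of XPath and requires well-formed markup, so treat it as a stand-in: real pages usually call for lxml or BeautifulSoup. The `PAGE` fragment and its class names are made up for illustration.

```python
import xml.etree.ElementTree as ET

# A small, well-formed HTML fragment (illustrative data only).
PAGE = """
<html>
  <body>
    <div class="product"><h2>Widget A</h2><span class="price">19.99</span></div>
    <div class="product"><h2>Widget B</h2><span class="price">24.50</span></div>
    <div class="ad"><h2>Sponsored</h2></div>
  </body>
</html>
"""

root = ET.fromstring(PAGE.strip())

# XPath: every <div> whose class attribute is exactly "product".
# The roughly equivalent CSS selector would be: div.product
products = root.findall(".//div[@class='product']")

items = []
for product in products:
    name = product.find("h2").text
    price = product.find("span[@class='price']").text
    items.append((name, float(price)))

print(items)
```

The selector skips the `ad` block entirely, which is the point of filtering the "soup": only elements matching the expression are returned.
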

Among all the Python web scraping libraries, we have enjoyed using lxml the most. This is why, in order not to show up as suspicious activity in the web server logs, we can use Python's random module and make the web scraper pause at random intervals.

With the freemium or free-to-paid web scraping software model, you will still have to pay eventually, especially if you are looking to scale your web scraping project. Therefore, a valid question is: why not pay from the start, get a fully customized product with anti-blocking precautions already implemented, and free up your precious time?

Web scraping is being used by marketing and digital teams to aggregate content in a more useful way. With hundreds of thousands of articles being posted online daily, it has become impossible for traditional teams to be aware of everything that is happening.

Below is the list of the best web scraping services identified by QuickEmailVerification through ongoing, exhaustive analysis. You will need, for example, to hire a technical person to continually monitor your scraper, and you will need a large data storage facility. With more than 7 billion pages scraped, Mozenda continues to serve enterprise clients from across the globe.

ProWebScraper rotates IP addresses with every request, from a pool of tens of millions of proxies across over a dozen ISPs, and automatically retries failed requests, so you will never be blocked. You can use ProWebScraper's chaining functionality, which helps you retrieve all the detail page data at the same time.

HarvestMan is a web crawler application written in the Python programming language. HarvestMan can be used to download files from websites, according to a number of user-specified rules. The latest version of HarvestMan supports more than 60 customization options.
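
The random pauses mentioned above can be sketched roughly as follows. The `crawl` and `random_delay` helpers, the delay bounds and the stand-in `fetch` function are all assumptions for illustration; a real scraper would pass in a function that performs the HTTP request.

```python
import random
import time


def random_delay(min_seconds=1.0, max_seconds=5.0, rng=random):
    """Pick a pause length uniformly between the two bounds."""
    return rng.uniform(min_seconds, max_seconds)


def crawl(urls, fetch, sleep=time.sleep, min_seconds=1.0, max_seconds=5.0):
    """Call fetch(url) for each URL, pausing a random interval between calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Pause before every request except the first.
            sleep(random_delay(min_seconds, max_seconds))
        results.append(fetch(url))
    return results


# Demo with a stand-in fetch function and tiny delays so it finishes quickly.
pages = crawl(
    ["https://example.com/a", "https://example.com/b"],
    fetch=lambda url: "fetched " + url,
    min_seconds=0.01,
    max_seconds=0.05,
)
print(pages)
```

Passing `sleep` in as a parameter is a design choice that keeps the pacing logic testable without actually waiting.
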

Scrapy is a full framework and, as such, comes with everything required for web scraping, including a module for sending HTTP requests and parsing data out of the downloaded HTML page. Puppeteer is a Node-based headless browser automation tool often used to retrieve data from websites that require JavaScript to display content.

Using an API is much simpler than web scraping, because with scraping you have to consider the peculiarities of a website and how its HTML is written. Some content is hidden behind JavaScript, and you have to take this into consideration too.

VisualScraper comes in free as well as premium plans starting from $49 per month with access to 100K+ pages. Its free application, similar to that of ParseHub, is available for Windows with additional C++ packages.

Scrapinghub converts the entire web page into organized content. Its team of experts is available for help in case its crawl builder can't meet your requirements. Its basic free plan gives you access to 1 concurrent crawl, and its premium plan for $25 per month provides access to up to 4 parallel crawls. CloudScrape supports data collection from any website and requires no download, just like Webhose.

In fact, most web scraping tutorials use BeautifulSoup to teach beginners how to write web scrapers. When it is used together with Requests to send HTTP requests, web scrapers become easier to develop, much easier than with Scrapy or PySpider. Requests is an HTTP library that makes it easy to send HTTP requests.
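
The fetch-then-parse pattern behind a Requests plus BeautifulSoup scraper can be sketched without third-party packages. To stay dependency-free, the example below swaps in the standard library's `html.parser` for BeautifulSoup and a hard-coded HTML string for the HTTP response; the `LinkExtractor` class and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect the href and text of every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current_href = None
        self._current_text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current_href = dict(attrs).get("href")
            self._current_text = []

    def handle_data(self, data):
        # Only keep text that appears inside a link.
        if self._current_href is not None:
            self._current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            text = "".join(self._current_text).strip()
            self.links.append((self._current_href, text))
            self._current_href = None


# In a real scraper this HTML would come from an HTTP response body.
html = (
    '<ul><li><a href="/tools/scrapy">Scrapy</a></li>'
    '<li><a href="/tools/pyspider">PySpider</a></li></ul>'
)

parser = LinkExtractor()
parser.feed(html)
print(parser.links)
```

With BeautifulSoup, the same extraction takes only a few lines, which is a large part of why tutorials favour it for beginners.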